BackdoorArts

Senior Member

Since I first learned about the differences between DX and FX cameras (which, to my great embarrassment, was not until after I purchased my first DSLR), the mathematics explaining those differences has always been a little confusing to me. For example, they say the DX sensor has a 1.5X Crop Factor, meaning that a lens on a DX body has an equivalent focal length 1.5x, or 50% longer, than the focal length listed on the lens. So a 300mm lens will act like a 450mm lens on a DX body.

Well, that’s cool, but why is it that when you shoot with an FX body in DX mode and get the 50% boost in focal length, you lose more than half of your resolution? I mean, if I have 36MP in my D800, why don’t I get a 24MP DX image instead of only around 16MP, and only a little more than 10MP on my 24MP D600?

It’s really not all that confusing if you lay it out properly.

An FX sensor is 36mm x 24mm (43.3mm on the diagonal). A DX sensor is 23.7mm x 15.7mm (28.4mm on the diagonal), which for the sake of this example and all the math involved we’ll round up to 24mm x 16mm (28.8mm on the diagonal).

Measured on the diagonal, the FX sensor is about 50% larger than the DX equivalent (calculated as ((43.3 - 28.8) / 28.8) x 100 to yield a percentage), or 1.5 times as big. This is where the 1.5X Crop Factor comes in: the focal length equivalence depends on this linear ratio alone. But focal length is the only measure in this discussion that depends on that ratio - all the rest have to do with the total area of the sensor and not just a single dimension.
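If you'd rather check the diagonal math than take it on faith, here is a minimal Python sketch. It assumes the rounded 24mm x 16mm DX dimensions used in this post, and the function name `diagonal_mm` is just something made up for the illustration.

```python
import math

def diagonal_mm(width_mm, height_mm):
    """Sensor diagonal via Pythagoras, in millimeters."""
    return math.hypot(width_mm, height_mm)

fx_diag = diagonal_mm(36, 24)   # FX sensor
dx_diag = diagonal_mm(24, 16)   # DX sensor, rounded dimensions (assumption)

# The crop factor is the ratio of the two diagonals.
crop_factor = fx_diag / dx_diag

print(round(fx_diag, 1), round(dx_diag, 1))   # 43.3 28.8
print(round(crop_factor, 2))                  # 1.5
print(round(300 * crop_factor))               # 450 (equivalent focal length of a 300mm lens)
```

With the unrounded 23.7mm x 15.7mm dimensions the ratio comes out slightly above 1.5, which is why 1.5X is quoted as a round figure.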

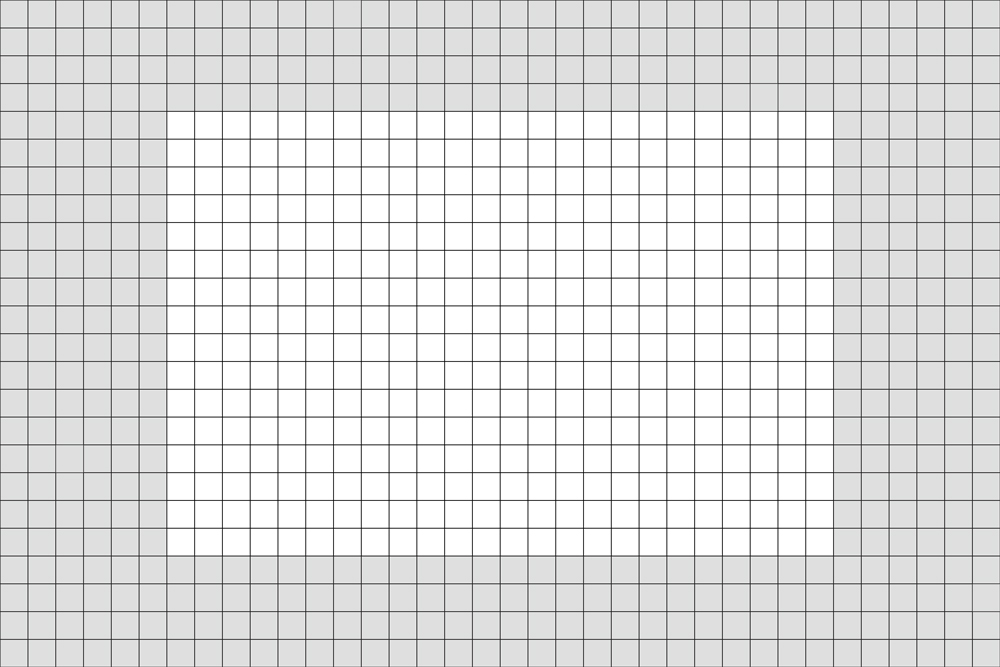

Take a look at the following grid. Assume each box is 1mm x 1mm, or one square millimeter. The DX sensor is represented by the white boxes (24 across and 16 down), while the FX sensor is represented by the combination of the white boxes and the grey boxes (36 across and 24 down).

Multiplying length times width, total surface area of each sensor is as follows:

FX: 36 x 24 = 864 sq. mm (i.e. 864 boxes in the grid)

DX: 24 x 16 = 384 sq. mm (i.e. 384 boxes in the grid)

That means the DX sensor is more than 50% smaller: it is 44.4% the size of an FX sensor (384 / 864), or 55.6% smaller. So, when you stick that FX camera in DX mode, you are now using only 44.4% of the sensor!!

Let’s look at the practical numbers. A D800 has 36MP evenly distributed across the FX sensor. The DX portion of the sensor contains 44.4% of them, or approximately 16MP total. In other words, if you distribute the pixels evenly into each box in the grid you’ll get about 41,667 pixels per box, and 41,667 pixels in 384 boxes gives you 16,000,128 pixels, or 16MP.
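The same arithmetic can be sketched in a few lines of Python. This assumes the rounded sensor dimensions from the grid and perfectly evenly distributed pixels; `dx_mode_megapixels` is a hypothetical helper name, and real bodies will report slightly different numbers because the true DX area is a bit smaller than 24mm x 16mm.

```python
FX_AREA_SQ_MM = 36 * 24   # 864 boxes in the grid
DX_AREA_SQ_MM = 24 * 16   # 384 boxes in the grid (rounded DX dimensions)

# Fraction of the FX sensor that is used in DX mode (about 0.444).
AREA_FRACTION = DX_AREA_SQ_MM / FX_AREA_SQ_MM

def dx_mode_megapixels(fx_megapixels):
    """Megapixels left when an FX sensor is cropped to the DX area."""
    return fx_megapixels * AREA_FRACTION

for name, mp in [("D800", 36), ("D600", 24)]:
    print(f"{name}: {dx_mode_megapixels(mp):.1f} MP in DX mode")
```

For the two bodies mentioned above this works out to roughly 16MP and a little under 11MP.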

That’s why shooting in DX mode on an FX camera gives you a smaller image than you might otherwise expect.

We can also use this to understand why FX cameras may be much better low light performers, while DX cameras might be much better for shooting things like wildlife where resolution is so important.

Let’s look at two 24MP sensor cameras, the D610 and the D7100. For the sake of the math, let’s assume that all factors outside of pixel size are equal, just for discussion (remember this is about the math and not meant to compare any specific data).

On the D610, the 24 million pixels are distributed across a 36mm x 24mm sensor, while on the D7100 the 24 million pixels are distributed across a 24mm x 16mm sensor. So, doing the math…

D610: 24,000,000 pixels / 864 sq. mm = 27,778 pixels per sq. mm

D7100: 24,000,000 pixels / 384 sq. mm = 62,500 pixels per sq. mm

That’s 2.25 times as many pixels per sq. mm on the D7100, or 125% more.
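Here is a quick Python sketch of the density comparison, again assuming exactly 24,000,000 pixels on each body and the rounded sensor sizes. The function name `pixels_per_sq_mm` is made up for the example.

```python
def pixels_per_sq_mm(pixels, width_mm, height_mm):
    """Average pixel density over the whole sensor area."""
    return pixels / (width_mm * height_mm)

d610_density = pixels_per_sq_mm(24_000_000, 36, 24)
d7100_density = pixels_per_sq_mm(24_000_000, 24, 16)

# How many times denser the DX sensor is than the FX sensor.
density_ratio = d7100_density / d610_density

print(round(d610_density))       # 27778
print(round(d7100_density))      # 62500
print(round(density_ratio, 2))   # 2.25
```

Note that 2.25 is just the crop factor squared (1.5 x 1.5), and 1 / 2.25 is the 44.4% from earlier.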

But what does that mean?!

Well, from a resolution perspective, it means that for every square millimeter of sensor you’re getting 2.25 times as many pixels on the D7100. This can be critically important when you’re shooting wildlife that may not occupy the entire frame, because you have 2.25 times as much information in that small portion of the sensor. So, when it comes to trying to see feather detail on a bird or fur on an animal, there’s a strong chance that you’ll get what appears to be a sharper cropped image from the D7100 than you would from a D610.

But this also comes at a cost, since in order to squeeze the same number of pixels into 44% of the space each pixel must be much smaller. How much smaller? If there are 27,778 pixels in 1 sq. mm on the D610, then each pixel occupies 1/27,778th of a sq. mm, or 0.000036 sq. mm. On the D7100, that’s 1/62,500th of a sq. mm, or 0.000016 sq. mm. Do the division and you’ll see that each D7100 pixel has 44% of the area of a D610 pixel (there's that number again), which makes it about 56% smaller. That means that when each sensor is exposed to light for the same amount of time, the amount of light information available to each pixel is much greater on the FX camera due to its larger pixels. This is why FX sensors tend to be so much better in low light situations (there is a lot of optical math that goes into precisely why, but suffice it to say that bigger is better where light information is concerned, again all other things being equal - if you're curious, Google the precise math).
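To put the pixel sizes in more familiar units, here is a rough Python sketch that turns area per pixel into a pixel width in microns. It assumes square pixels that tile the whole sensor with no gaps, which is a simplification, and it uses the rounded sensor sizes from this post. `pixel_pitch_um` is a hypothetical helper name.

```python
import math

def pixel_pitch_um(pixels, width_mm, height_mm):
    """Approximate side length of one pixel in microns, assuming
    square pixels that cover the entire sensor (a simplification)."""
    area_per_pixel_sq_mm = (width_mm * height_mm) / pixels
    return math.sqrt(area_per_pixel_sq_mm) * 1000  # mm -> microns

d610_pitch = pixel_pitch_um(24_000_000, 36, 24)
d7100_pitch = pixel_pitch_um(24_000_000, 24, 16)

# Light-gathering area of one D7100 pixel relative to one D610 pixel.
area_ratio = (d7100_pitch / d610_pitch) ** 2

print(round(d610_pitch, 2))    # 6.0
print(round(d7100_pitch, 2))   # 4.0
print(round(area_ratio, 3))    # 0.444
```

So under these assumptions each D610 pixel is about 6 microns across and each D7100 pixel about 4 microns across: two-thirds the width, but only 44.4% of the area.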

So, there you have it. A basic tutorial on FX vs. DX math and a new constant for you to memorize to go with the 1.5X focal length number - 44.4%.
